Researchers from Carnegie Mellon University and the Massachusetts Institute of Technology (MIT) have unveiled an innovative technology designed to safeguard users’ personal data when generating AI-driven content.

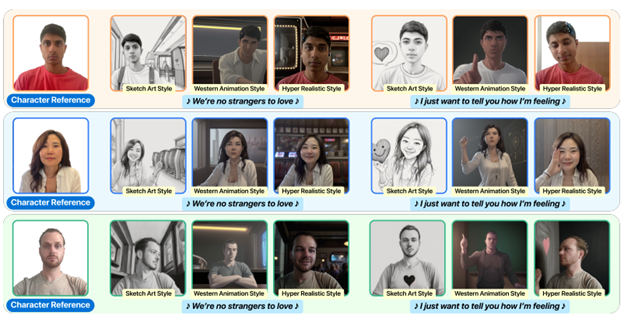

The system, known as CHARCHA (Computer Human Assessment for Recreating Characters with Human Actions), enables users to securely replicate their likeness within a neural network while addressing the risks associated with unauthorized deepfake usage.

Inspired by CAPTCHA, the test widely used to tell humans from bots, CHARCHA replaces traditional text- and image-based challenges with physical interactions. To pass verification, users must perform a randomly assigned set of movements in front of a webcam, such as tilting their head, squinting, or smiling with an open mouth.

This live verification process, which takes approximately 90 seconds, analyzes the actions in real time to confirm that a genuine human is present and executing the requested movements. By scrutinizing how the tasks are performed, the system checks that the camera is seeing a live person rather than a pre-recorded video or a static image.
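The randomized challenge described above can be illustrated with a minimal Python sketch. It is not CHARCHA's actual implementation: the action labels, the `Observation` type, and the `make_challenge` and `verify` functions are hypothetical names introduced here, and the sketch assumes that some separate vision model has already turned webcam frames into timestamped action labels. Treating the roughly 90-second duration as a hard time limit is also an assumption.

```python
import random
from dataclasses import dataclass

# Hypothetical action vocabulary; the article names only these three examples.
ACTIONS = ["tilt_head", "squint", "smile_open_mouth"]


@dataclass
class Observation:
    action: str       # label reported by an assumed vision model
    timestamp: float  # seconds since the session started


def make_challenge(rng: random.Random, length: int = 3) -> list[str]:
    """Pick a random ordered sequence of actions for this session."""
    return [rng.choice(ACTIONS) for _ in range(length)]


def verify(challenge: list[str], observations: list[Observation],
           time_limit: float = 90.0) -> bool:
    """Accept only if the in-time actions match the challenge, in order."""
    in_time = sorted(
        (o for o in observations if 0.0 <= o.timestamp <= time_limit),
        key=lambda o: o.timestamp,
    )
    return [o.action for o in in_time] == list(challenge)


if __name__ == "__main__":
    challenge = make_challenge(random.Random())
    # Simulate a user who performs each requested action ten seconds apart.
    performed = [Observation(a, 10.0 * (i + 1)) for i, a in enumerate(challenge)]
    print(challenge, verify(challenge, performed))
```

Because the sequence is drawn fresh for each session, a video recorded in advance is unlikely to show the requested actions in the requested order, which is the intuition behind using random movements as a liveness check.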

Upon successful verification, the captured images are used to train the system's internal content generation model. This approach allows users to explicitly grant permission for their digital likeness to be recreated while maintaining full control over their personal data.

The researchers emphasized that CHARCHA was developed in response to the rapid proliferation of generative AI and the associated privacy concerns. The system empowers individuals to regulate the use of their digital identity without relying on third-party platforms or their data retention policies.

The project was presented at the NeurIPS 2024 conference, where it garnered significant interest from industry professionals. The creators of CHARCHA highlight that this method has the potential to reshape public perception of generative AI, fostering greater trust and user autonomy in the field of artificial intelligence.