OpenAI has banned several accounts that used ChatGPT to help develop a potentially harmful AI-driven surveillance tool. According to OpenAI’s researchers, the tool, which was designed for social media monitoring, was likely created in China and relied on one of Meta’s Llama models.

Analysis revealed that the suspended accounts used ChatGPT to write detailed descriptions of the tool and to analyze documents. These efforts supported the creation of a system that tracked anti-China protests in Western countries in real time and relayed the information to Chinese authorities. OpenAI has dubbed the operation Peer Review; its operators systematically refined and tested their surveillance technology.

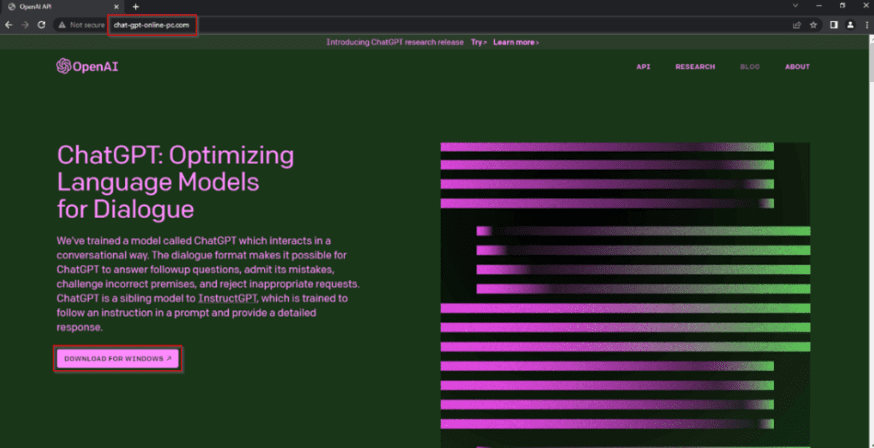

During its investigation, OpenAI discovered that ChatGPT had been used to debug and modify the source code of a system known as Qianyue Overseas Public Opinion AI Assistant. This tool monitored posts and comments across platforms such as X (formerly Twitter), Facebook, Instagram, YouTube, Telegram, and Reddit, tracking references to protest movements and other politically sensitive topics.

The same operators also used ChatGPT to gather open-source intelligence on U.S. think tanks and on political figures in Australia, Cambodia, and the United States, and to translate and analyze documents related to Uyghur protests. The authenticity of some of the screenshots they uploaded remains uncertain.

Beyond this case, OpenAI also identified and disrupted several other networks that misused ChatGPT:

- Fraudulent Employment Scams: A group linked to North Korea used AI to create fake résumés, online profiles, and job acceptance letters as part of a scheme to infiltrate IT companies.

- Propaganda in Latin America: A China-linked network published anti-American articles in English and Spanish on websites in Peru, Mexico, and Ecuador, resembling activity from the previously identified Spamouflage campaign.

- Romance Scams: A Cambodia-based operation generated deceptive comments in Japanese, Chinese, and English to facilitate fraudulent investment and romance schemes.

- Pro-Iranian Influence Campaigns: A network of five accounts promoted content supporting Palestine, Hamas, and Iran while pushing narratives against the United States and Israel. Some of these accounts contributed to more than one propaganda operation.

- North Korean Cybercrime: The hacking groups Kimsuky and BlueNoroff used ChatGPT to research cryptocurrency-related attack techniques and to refine brute-force scripts targeting Remote Desktop Protocol (RDP) connections.

- Election Interference in Ghana: A network attempted to shape public discourse by publishing articles and comments on Empowering Ghana, an English-language website, with the aim of influencing the country’s elections.

- Task-Based Fraud Schemes: A Cambodian network lured people into fake gig-economy jobs, such as boosting likes and writing paid reviews, and then required them to make upfront payments to participate.

These cases illustrate how artificial intelligence has become an effective tool for cyberattacks, disinformation campaigns, and large-scale manipulation of public opinion. A recent report by the Google Threat Intelligence Group found that at least 57 threat groups affiliated with China, Iran, and North Korea are already using AI in various phases of their attacks, including content generation and data analysis.

OpenAI stresses that collaboration among AI companies, social media platforms, hosting providers, and cybersecurity researchers is critical for identifying threats and building robust defenses against AI misuse. According to the company, such coordination enables faster detection of malicious actors and significantly curtails their influence.