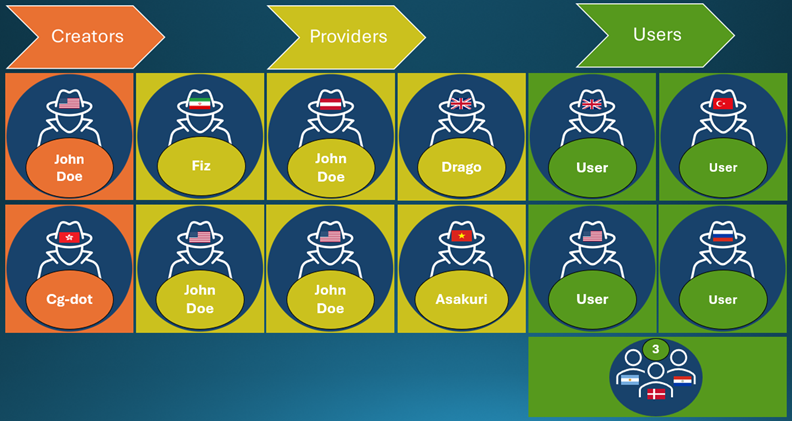

Microsoft has expanded its lawsuit against the cybercriminal group Storm-2139, naming four developers it says built tools that bypass the safety controls of generative AI services, including Azure OpenAI Service. The named defendants are Arian Yadegarnia (Iran), Alan Krysiak (UK), Ricky Yuan (Hong Kong), and Fat Fung Tan (Vietnam). Their tools let users alter the behavior of AI services in order to generate prohibited content.

Storm-2139 operated as a three-tier structure: developers built the tools, distributors resold them at varying access levels and prices, and end users used the services to generate illicit material. The resulting images, including intimate depictions of celebrities, violated Microsoft’s usage policies.

Microsoft first filed suit in December 2024 against unnamed defendants. Its subsequent investigation identified specific participants. In the course of the litigation, Microsoft obtained a temporary injunction and took down one of the group’s main websites, which triggered infighting within Storm-2139.

After court records were made public, group members began doxing Microsoft’s attorneys by circulating their personal information. The company also received emails in which members tried to shift blame onto other participants.

Microsoft says it remains committed to combating the unlawful abuse of AI by strengthening its safeguards and working with law enforcement. The company has also published policy recommendations calling for legislation to be updated to deter criminals who use generative AI for malicious purposes.